Computational Imaging Lab @ Cornell

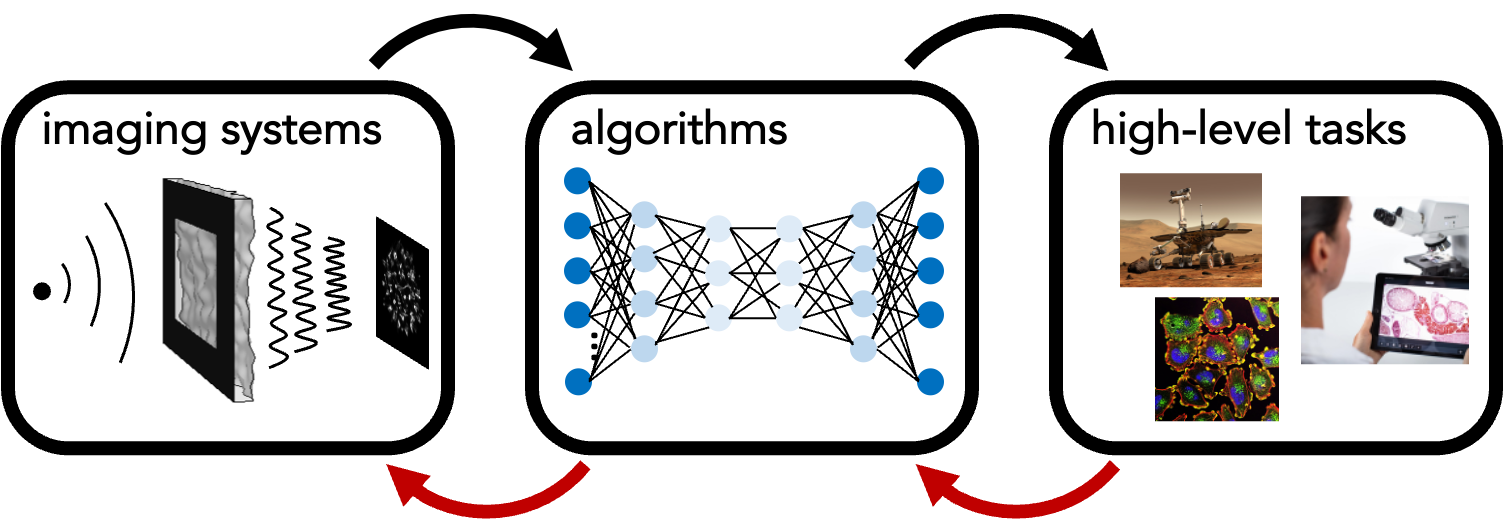

We combine ideas from machine learning, signal processing, optics, computer vision, and physics to build better imaging systems (cameras, microscopes, and telescopes) through the co-design of optics, algorithms, and high-level tasks. Our aim is to design the next generation of smart, computational imagers that fuel scientific discovery, robotics, and medical diagnostics. We are particularly interested in:

- Differentiable optics - Can we use data and machine learning tools to design better cameras, microscopes, and telescopes?

- Physics-informed machine learning - How can we effectively combine our knowledge of imaging system physics with deep learning?

- Task-based imaging systems - What's the best camera or microscope for high-level tasks, such as robotics or medical diagnostics?

- Inverse problems and neural representations - Can we leverage neural priors to improve our imaging systems for microscopy and photography?

- Uncertainty quantification in imaging - How can we make imaging algorithms more trustworthy and robust for critical applications in science and medicine?

Check out our previous research projects and publications for more information!

Interested? Come join us at Cornell!

news

| Date | News |
|---|---|
| Feb 2026 | Kristina will be giving an invited talk at Stanford’s Optics Seminar Series, hosted by the Applied Physics department, on March 9th! |
| Jul 2025 | Cassandra, Shamus, and Hasindu will present three posters at ICCP in Toronto next week! |
| Jul 2025 | Christian’s paper, ‘Spectral DefocusCam: super-resolved hyperspectral imaging through defocus’, has been accepted to ICCP! |