research

Some of our previous projects are summarized below.

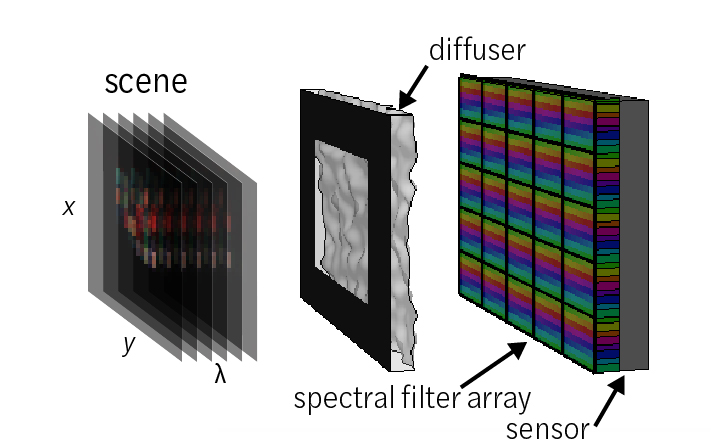

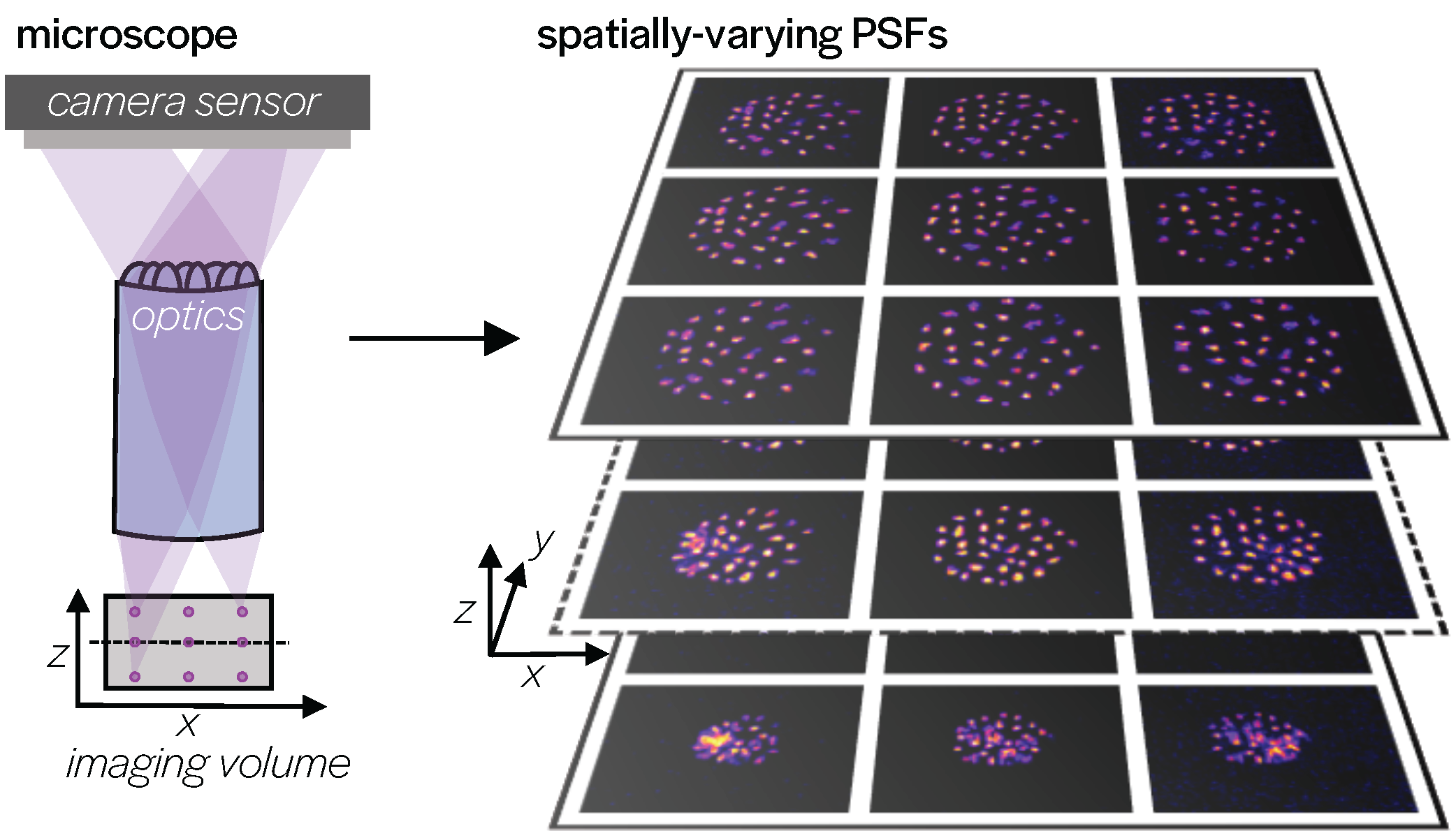

Compressive multidimensional imaging

Cameras are usually optimized to take sharp 2D images, but there is much more to the world than still images: 3D structure, multiple wavelengths of light, the polarization state of light, and temporal information. By co-designing the optics and algorithms, we can compress this additional information onto a 2D camera sensor and then computationally recover the encoded information. Check out the project links below to see how we can make a $5 hyperspectral camera, a miniature microscope that captures 3D volumes in a single shot, and a camera that captures an entire video in a single image.
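As a rough illustration of the encode-then-recover idea, the sketch below uses a toy linear model. A signal with more unknowns than sensor pixels (standing in for, e.g., a flattened 3D volume or spectral cube) is multiplied by a random matrix `A`, a hypothetical stand-in for the optical encoding. A sparse estimate is then recovered with ISTA (iterative soft-thresholding) for an L1-regularized least-squares problem. The matrix, the sparsity prior, the sizes, and the regularization weight `reg` are all assumptions for illustration, not the specific optics or solvers used in the projects.

```python
import math
import random

def matvec(A, x):
    """Forward model y = A x, with A stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def rmatvec(A, r):
    """Adjoint operation A^T r."""
    out = [0.0] * len(A[0])
    for row, ri in zip(A, r):
        for j, a in enumerate(row):
            out[j] += a * ri
    return out

def soft_threshold(v, t):
    """Proximal operator of t * ||v||_1; shrinks entries toward zero (sparsity prior)."""
    return [math.copysign(max(abs(x) - t, 0.0), x) for x in v]

def largest_eigenvalue(A, iters=200):
    """Power-iteration estimate of ||A||_2^2, the Lipschitz constant of the data-term gradient."""
    v = [1.0] * len(A[0])
    lam = 0.0
    for _ in range(iters):
        w = rmatvec(A, matvec(A, v))
        lam = math.sqrt(sum(wi * wi for wi in w))
        v = [wi / lam for wi in w]
    return lam

def ista(A, y, reg=0.01, iters=2000):
    """Solve min_x 0.5 * ||A x - y||^2 + reg * ||x||_1 by iterative soft-thresholding."""
    step = 1.0 / (1.05 * largest_eigenvalue(A))  # small safety margin on the step size
    x = [0.0] * len(A[0])
    for _ in range(iters):
        residual = [ax - yi for ax, yi in zip(matvec(A, x), y)]
        grad = rmatvec(A, residual)
        x = soft_threshold([xi - step * g for xi, g in zip(x, grad)], step * reg)
    return x

random.seed(0)
n, m = 40, 24  # unknowns vs. sensor measurements (m < n: underdetermined)
# Hypothetical random encoding in place of a calibrated optical model
A = [[random.gauss(0.0, 1.0 / math.sqrt(m)) for _ in range(n)] for _ in range(m)]
x_true = [0.0] * n
x_true[3], x_true[17], x_true[31] = 1.5, -2.0, 1.0  # sparse toy scene
y = matvec(A, x_true)  # what the sensor records
x_hat = ista(A, y)
err = math.sqrt(sum((a - b) ** 2 for a, b in zip(x_hat, x_true)))
print(f"relative error: {err / math.sqrt(sum(v * v for v in x_true)):.3f}")
```

The sensor records only 24 numbers for 40 unknowns, so the system alone cannot pin down the scene; the sparsity prior is what makes recovery possible here. A practical system would replace the random matrix with a calibrated model of its optics.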

Physics-informed machine learning

Deep learning can seem like an uninterpretable black box. In our work, we incorporate knowledge of imaging-system physics into deep learning-based image reconstruction, making these methods faster, higher quality, and more interpretable. Physics-informed machine learning lets us push the limits of our computational cameras. In the projects below, we show how these methods can allow cameras to see in the darkest conditions (under starlight, with no moon present), speed up algorithms for 3D microscopy by 1,000×, and enable compelling photography with cheap, lensless cameras.
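A minimal sketch of the physics-informed pattern, under strong simplifying assumptions: rather than a deep network, it uses a single physics-based layer, Wiener deconvolution with a known point spread function (PSF), and learns only its regularization weight `lam` from training pairs by grid search, standing in for gradient-based end-to-end training. The PSF, the 1D piecewise-constant scenes, the noise level, and the grid are all hypothetical. Because the forward model is built in, the learned parameter stays interpretable as a noise-to-signal trade-off.

```python
import cmath
import random

def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def blur(x, psf):
    """Known forward model (the 'physics'): circular convolution with the PSF."""
    n = len(x)
    return [sum(psf[j] * x[(i - j) % n] for j in range(len(psf))) for i in range(n)]

def wiener(y, psf, lam):
    """Wiener deconvolution in the Fourier domain; lam is the only free parameter."""
    n = len(y)
    H = dft(list(psf) + [0.0] * (n - len(psf)))
    Y = dft(y)
    X = [h.conjugate() * yk / (abs(h) ** 2 + lam) for h, yk in zip(H, Y)]
    return [v.real for v in idft(X)]

def mse(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)

def random_scene(n, p_edge=0.1):
    """Hypothetical piecewise-constant 1D scene with randomly placed edges."""
    x, level = [], random.random()
    for _ in range(n):
        if random.random() < p_edge:
            level = random.random()
        x.append(level)
    return x

random.seed(1)
PSF = [0.6, 0.3, 0.1]  # hypothetical, assumed-known PSF (its spectrum has no zeros)
N, SIGMA = 32, 0.05
train = []
for _ in range(20):
    x = random_scene(N)
    y = [v + random.gauss(0.0, SIGMA) for v in blur(x, PSF)]
    train.append((x, y))

# "Training": pick the lam that minimizes mean reconstruction error on the data
grid = [1e-4, 1e-3, 1e-2, 1e-1, 1.0]
losses = {lam: sum(mse(wiener(y, PSF, lam), x) for x, y in train) / len(train)
          for lam in grid}
best_lam = min(losses, key=losses.get)
print("learned lambda:", best_lam)
```

In a full physics-informed network, a layer like `wiener` could be made differentiable and trained jointly with a neural network that refines its output.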

Robust and trustworthy imaging

As we push our cameras, microscopes, and telescopes to and past their limits, can we still trust these devices enough for scientific and medical applications? Computational imagers rely on algorithms and priors to extract signals and insights from noisy, underdetermined, aberrated, and corrupted measurements. However, these algorithms can be prone to hallucination, i.e., producing realistic-looking features that are not actually present in the sample. In our work, we use uncertainty quantification based on conformal prediction to quickly and accurately predict confidence intervals for recovered images. In the projects below, we show how to efficiently add uncertainty prediction to any imaging inverse problem and how to use uncertainty in the loop to speed up adaptive microscopy. These projects are a step toward trustworthy and robust computational imagers.
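The following sketch shows generic split conformal prediction for per-pixel intervals; it is not necessarily the procedure used in the projects. A held-out calibration set scores each pixel by |truth - prediction| / sigma, where sigma is some heuristic uncertainty (here simulated, and deliberately too small by a factor of 2). The finite-sample quantile `qhat` of those scores rescales sigma so that intervals `pred ± qhat * sigma` cover the truth with probability at least 1 - alpha, assuming calibration and test pixels are exchangeable. Treating pixels as exchangeable is a simplification; the hypothetical `simulate` function draws them i.i.d.

```python
import math
import random

def conformal_quantile(scores, alpha):
    """Finite-sample-corrected (1 - alpha) quantile used in split conformal prediction."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # rank of the order statistic to use
    if k > n:
        return math.inf  # too few calibration points for this alpha
    return sorted(scores)[k - 1]

def calibrate(preds, sigmas, truths, alpha=0.1):
    """Normalized nonconformity scores |truth - pred| / sigma on held-out data."""
    scores = [abs(t - p) / s for p, s, t in zip(preds, sigmas, truths)]
    return conformal_quantile(scores, alpha)

def intervals(preds, sigmas, qhat):
    """Per-pixel intervals: heuristic uncertainty rescaled by the calibrated factor."""
    return [(p - qhat * s, p + qhat * s) for p, s in zip(preds, sigmas)]

def simulate(num_pixels):
    """Hypothetical reconstructions whose true error is 2x the heuristic sigma."""
    truths, preds, sigmas = [], [], []
    for _ in range(num_pixels):
        t = random.random()
        s = 0.02 + 0.1 * random.random()  # per-pixel heuristic uncertainty
        truths.append(t)
        preds.append(t + random.gauss(0.0, 2.0 * s))
        sigmas.append(s)
    return preds, sigmas, truths

random.seed(2)
cal = simulate(1000)
qhat = calibrate(*cal, alpha=0.1)
test_preds, test_sigmas, test_truths = simulate(5000)
bounds = intervals(test_preds, test_sigmas, qhat)
coverage = sum(lo <= t <= hi for (lo, hi), t in zip(bounds, test_truths)) / len(test_truths)
print(f"qhat = {qhat:.2f}, empirical coverage = {coverage:.3f}")
```

Since the simulated error is Gaussian with twice the heuristic sigma, `qhat` should land near 2 × 1.645 ≈ 3.3 and the empirical coverage near 0.9. The guarantee is marginal (on average over pixels), not per pixel.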

Check out our recent papers for more details (see publications).